Introduction

Recently, I’ve gotten into the habit of waking up earlier than usual: 7:00 AM sharp. I’m still getting used to it, and most mornings I wake up groggy and low on energy (especially during winter, when it’s still pitch black outside).

One thing that’s helped lift my spirits during these sluggish starts is playing some jazz, lo-fi hip-hop, or another instrumental tune on the smart TV in my living room. The combination of soothing music and cozy visuals, often landscapes or soft illustrations, makes those early hours feel just a little less miserable.

Usually, I’ll get out of bed, get dressed, and do the things one usually does in the morning before turning on the TV and searching for a video on YouTube. But sometimes the remote is nowhere to be found. And even when it is, typing a video title on a clunky virtual keyboard with a four-directional remote feels like too much effort. On those days, I skip the music and just push through my morning.

As the mornings passed, the annoyance of going through my “music ritual” slowly grew, until it outweighed the discomfort of facing those mornings in eerie silence. Eventually, I decided it was time to fix this pain point in my life the only way I know how: with a software project that ended up taking way too much time.

Doing Things The “Smart” Way (SmartThings)

When ideas on how to solve this challenge first flooded my mind, I was quick to shut them all down. I’m already juggling too many other things in life; I couldn’t afford to dive into yet another side project. “Don’t be a hero,” I told myself. “Just use the native Samsung IoT functionality.”

When I bought my TV (a Samsung TU50U8005FUXXC model), I didn’t think much about it. I’m not exactly a smart TV enthusiast, so as long as it wasn’t flooded with ads, I was fine with it. I didn’t even give a second thought to the IoT ecosystem or the operating system.

As I’ve come to realize, though… it sucks. The TV hardware itself seems decent enough, but the software is a mess. The Tizen OS is full of bloat and offers a terrible development experience (sideloading third-party apps is a nightmare). And since it’s not Android-based, finding apps for it is a pain.

I wasn’t impressed with the IoT ecosystem either. To be honest, I’ve never really delved into IoT before, so I didn’t have much to compare it to. Still, I figured that something as simple as “play this video on YouTube at 7:00 AM every day” should be a no-brainer to automate. I mean, this has to be one of the most basic IoT use cases, right?

That’s when I turned to SmartThings, Samsung’s own home automation solution. I downloaded the app (which, by the way, has some pretty concerning privacy policies and app permissions) and connected it to my TV, only to be, once again, underwhelmed.

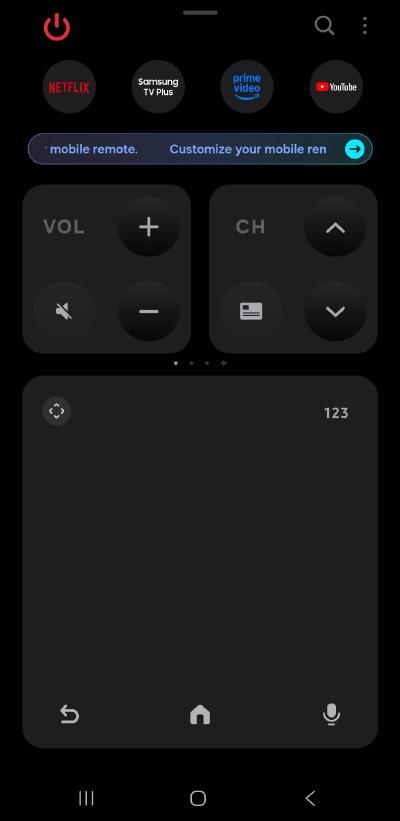

The first thing I noticed was that I could use my phone as a virtual remote, which was kind of cool. A promising start.

Using phone as TV remote.

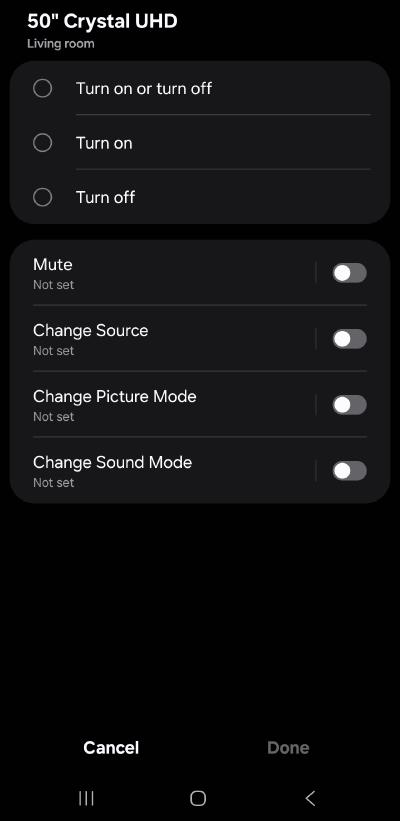

Next, I stumbled upon the “Routines” section, which allows you to trigger a set of actions based on certain events (like the time of day). This was exactly what I was looking for. But I was pretty disappointed to find that the only actions available for my TV were to turn it on and off.

TV automation actions. Just turning it on and off!

I searched high and low for a solution, convinced there had to be a native way to automate this. But nope. Absolutely nothing. How is this not a basic feature? At that point, enough was enough. I wasn’t going to just accept this glaring oversight, so I decided it was time to waste yet more of my life on yet another Python project.

Doing Things The Hacky Way

The How

While my experience with the SmartThings app was underwhelming, I did discover that my smartphone could act as a remote for my TV. This suggested that the TV might be exposing some sort of API, maybe via HTTP or WebSockets, allowing a client to send commands just like a regular remote would. This was promising, because if my phone could make those requests, surely I could write some software to mimic them.

A quick search on GitHub confirmed my suspicions. Not only was there a WebSocket API for the TV, but someone with the handle xchwarze had gone through the trouble of reverse-engineering it and created a Python wrapper called samsung-tv-ws-api!

After some light testing, I was pleased to find that the library actually worked with my TV model. That was all the confirmation I needed: I was confident this automation project was finally going to happen.

There was, however, one major limitation that would constrain the entire solution. While I now had the ability to programmatically control everything a remote could do, the feedback from the TV was almost non-existent. In other words, the Python code could send commands, but there was essentially nothing it could receive from the TV. This meant that whatever automation I built would have to be completely blind to the world, just sending commands without any feedback to confirm if things were working as expected.

Despite this, I managed to design a pretty solid solution with the limited tools at my disposal.

The Plan

I quickly outlined the following procedure for the script to follow:

- Turn on the TV at 06:55 AM

- Reduce the volume to zero

- Open the YouTube app

- Navigate to and select the search bar

- Type the title of a random video from a whitelist

- Search for the video and select a random option from the first five results

- Restore the volume to a comfortable level

- Play the video, ensuring the music is playing by 07:00 AM

This way, when my alarm goes off at 7:00 AM and I step out of the room, I’ll be greeted in the living room by the TV already softly playing a tune!

The procedure itself was straightforward enough to outline, but the real challenge came in implementing it while considering the “blindness” of our agent. Since we can’t get feedback from the TV, the script needs to be designed with actions that are correct regardless of the TV’s initial state. In other words, it has to be self-correcting and deterministic.

As explained, this software must work by emulating the remote controller, meaning that all actions are limited to what’s possible with a physical remote. We’ll be using the following commands:

- Cardinal movement (↑ UP, ↓ DOWN, ← LEFT, → RIGHT)

- Enter (✔ Enter)

- Return (↩ RETURN)

- Volume up and down (+ VOLUME UP, - VOLUME DOWN)
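For reference, these are the key-code strings that the scripts later in this post send through samsung-tv-ws-api for each of those buttons. The REMOTE_KEYS dictionary and the to_key_codes helper are hypothetical conveniences for illustration, not part of the library or of the original scripts.

```python
# Hypothetical lookup table: remote buttons -> key codes used by the scripts in this post
REMOTE_KEYS = {
    "UP": "KEY_UP",
    "DOWN": "KEY_DOWN",
    "LEFT": "KEY_LEFT",
    "RIGHT": "KEY_RIGHT",
    "ENTER": "KEY_ENTER",
    "RETURN": "KEY_RETURN",
    "VOLUME_UP": "KEY_VOLUP",
    "VOLUME_DOWN": "KEY_VOLDOWN",
}


def to_key_codes(buttons):
    """Translate button names into key codes, rejecting anything a remote can't do."""
    codes = []
    for button in buttons:
        if button not in REMOTE_KEYS:
            raise ValueError(f"Not a remote button: {button}")
        codes.append(REMOTE_KEYS[button])
    return codes


print(to_key_codes(["RETURN", "RETURN", "ENTER"]))  # ['KEY_RETURN', 'KEY_RETURN', 'KEY_ENTER']
```

Restricting the vocabulary this way keeps the agent honest: anything that isn't on the physical remote simply can't be expressed.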

Another issue I encountered during development was socket connection timeouts between the client and the TV. This would often happen twice in a single run due to two longer “waits” in the code. So, it was crucial that the software be fault-tolerant and capable of recovering from these sudden exceptions.

The architecture I ended up going with has three parts:

- A generate_actions.py Python script, responsible for computing the list of actions to be sent to the TV and saving them to an actions.lst text file. Each line in this file represents an action to be taken by the agent, in FIFO order.

- An execute_actions.py Python script, which reads the top action from actions.lst, executes it, and then removes it from the file before moving on to the next one. If any exception occurs, the script terminates.

- A run.sh Bash script, which runs generate_actions.py once at the start, then runs execute_actions.py up to 10 times if it fails.

This architecture allows for better control over the process and ensures that the automation has a higher chance of success, even if things go wrong.
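The resume-on-retry behaviour of this split can be sketched without a TV at all. This is a minimal sketch, not the original code: read_queue, write_queue, run_queue and flaky_execute are hypothetical names, flaky_execute stands in for the WebSocket calls, and writing through a temporary file plus os.replace is an extra safeguard that the original scripts do not use.

```python
import os
import tempfile


def read_queue(path):
    """Return the non-empty lines of the queue file (empty list if it is missing)."""
    if not os.path.exists(path):
        return []
    with open(path) as f:
        return [line.strip() for line in f if line.strip()]


def write_queue(path, lines):
    """Persist the queue via a temp file + os.replace, so a crash never leaves it half-written."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        f.writelines(line + "\n" for line in lines)
    os.replace(tmp_path, path)


def run_queue(path, execute):
    """Run actions in FIFO order, dropping each one only after it succeeds."""
    while True:
        lines = read_queue(path)
        if not lines:
            return
        execute(lines[0])  # if this raises, the action stays in the file
        write_queue(path, lines[1:])


# Demo: a hypothetical executor that times out once on the second action
queue = os.path.join(tempfile.mkdtemp(), "actions.lst")
write_queue(queue, ["KEY_POWER", "SLEEP_2", "KEY_ENTER"])

sent = []
pending_failures = {"SLEEP_2": 1}


def flaky_execute(action):
    if pending_failures.get(action, 0) > 0:
        pending_failures[action] -= 1
        raise TimeoutError("simulated socket timeout")
    sent.append(action)


for _ in range(10):  # like run.sh: up to 10 attempts, each resuming from the file
    try:
        run_queue(queue, flaky_execute)
        break
    except TimeoutError:
        pass

print(sent)  # ['KEY_POWER', 'SLEEP_2', 'KEY_ENTER']
```

The key design choice is removing an action only after it has run, so a crash repeats at most the action that failed instead of skipping it.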

The Problems

There were several small roadblocks along the way that needed to be solved to get the automation working (almost) 100% of the time. Here are some of the most noteworthy ones.

The Volume

The TV’s volume is an important variable in this process. It’s possible that whoever used the TV last left the volume too low or too high for my early-morning needs. Additionally, while navigating the YouTube app, there’s a chance it could play sounds (like ads or video previews) before reaching the video. To avoid that, I needed the volume at zero until just before the video plays.

This problem is a great case study for understanding the “blind agent” challenge: via the API, we can programmatically increase or decrease the volume, but we can’t set an exact level directly, nor can we query the TV for the current volume. Yet we need to ensure that our script always puts the TV at the desired volume, regardless of the TV’s starting state.

The solution here is simple: since the volume tops out at 100, sending the TV 100 - VOLUME DOWN commands is guaranteed to bring it down to 0. Then we send as many + VOLUME UP commands as the level we want.
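The reset-then-raise trick can be checked against a simulated TV. This is a minimal sketch under two assumptions of mine: every press moves the volume by exactly one step, and the volume is clamped to the range 0 to 100. volume_actions and simulate are hypothetical helpers, not part of the original scripts.

```python
MAX_VOLUME = 100  # the TV's volume ceiling, per the reasoning above


def volume_actions(target, max_volume=MAX_VOLUME):
    """Build a key sequence that reaches `target` from ANY starting volume.

    Pressing VOLDOWN max_volume times is guaranteed to reach 0 (extra presses
    at 0 do nothing), after which `target` VOLUP presses land exactly on target.
    """
    if not 0 <= target <= max_volume:
        raise ValueError("target volume out of range")
    return ["KEY_VOLDOWN"] * max_volume + ["KEY_VOLUP"] * target


def simulate(start, actions, max_volume=MAX_VOLUME):
    """Apply key presses to a simulated volume clamped to [0, max_volume]."""
    volume = start
    for key in actions:
        if key == "KEY_VOLDOWN":
            volume = max(0, volume - 1)
        elif key == "KEY_VOLUP":
            volume = min(max_volume, volume + 1)
    return volume


seq = volume_actions(7)
print({simulate(start, seq) for start in range(0, 101)})  # {7}
```

Every one of the 101 possible starting volumes ends at the same level, which is exactly the property a blind agent needs.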

The downside is that there’s a delay between each command and its confirmation, so sending 100 - VOLUME DOWN commands is slow. To overcome this, I execute the volume decrease commands in a separate thread, allowing the process to continue while the volume is gradually decreased to 0.
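The background mute boils down to a daemon thread that keeps pressing volume down while the main flow continues. A minimal sketch: mute_in_background and fake_send_key are hypothetical names, and fake_send_key only records keys in place of SamsungTVWS.send_key.

```python
import threading
import time


def mute_in_background(send_key, presses=100, delay=0.1):
    """Send KEY_VOLDOWN `presses` times on a daemon thread and return that thread."""
    def worker():
        for _ in range(presses):
            send_key("KEY_VOLDOWN")
            time.sleep(delay)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


# Hypothetical stand-in for SamsungTVWS.send_key: records keys instead of sending them
sent = []
sent_lock = threading.Lock()


def fake_send_key(key):
    with sent_lock:
        sent.append(key)


t = mute_in_background(fake_send_key, presses=20, delay=0.01)
fake_send_key("KEY_ENTER")  # the main flow carries on while the volume drops
t.join()  # the real script instead relies on its long sleeps outlasting the thread
print(sent.count("KEY_VOLDOWN"))  # 20
```

Because the thread is a daemon, Python stops it as soon as the main script exits, so the mute has to finish before then. 100 presses with a 0.1-second pause take at least 10 seconds, which the later 90-second ad wait comfortably covers.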

The Initial State Problem

This one took a bit more thought to solve. When the script opens YouTube, the app restores whatever state it was left in at the end of the last session. So, if someone was watching a video when they turned off the TV, the app will resume right on the page where they left off. On top of that, the app sometimes prompts us to choose a user profile before loading the main interface, adding another layer of inconsistency to what the interface will look like once the app is open.

Since we know neither the interface layout nor the cursor position when the app starts, it is difficult to reliably navigate the cursor to the search bar. For the script to work, the cursor must always start from the same place, regardless of what happened the last time the app was used, because the sequence of actions we send assumes a fixed starting position.

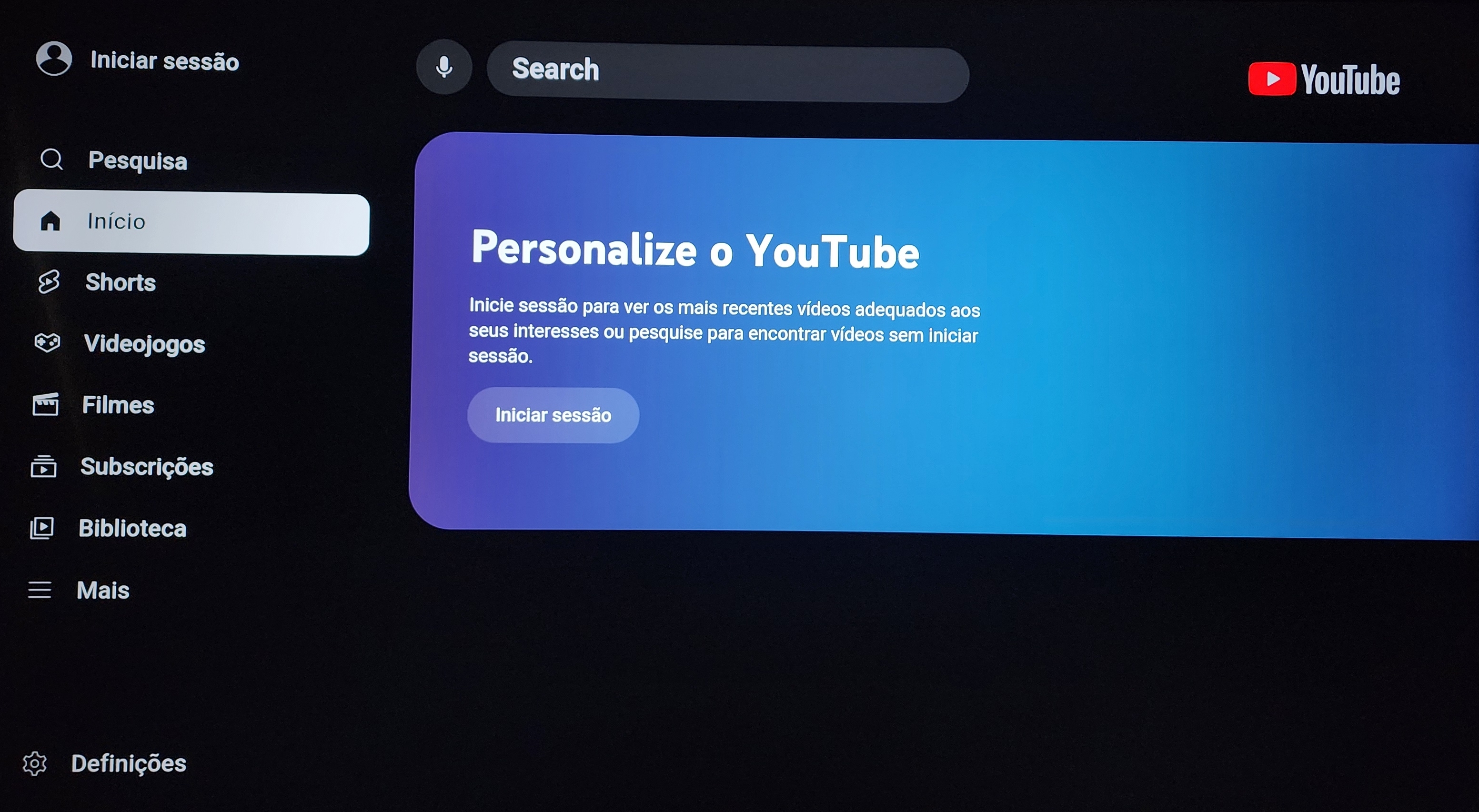

The solution I came up with is simple but effective: we send a series of ↩ RETURN commands to the TV. At least five, but the exact number doesn’t matter. No matter where the cursor is, if we back out a few times, we’ll inevitably land on the YouTube home page with the cursor on the “Home” tab.

Now, you might object that we don’t know exactly how many ↩ RETURN commands it will take to get to the home page, which could leave us in an unpredictable state. But here’s where the trick comes in: if we send more ↩ RETURN commands than necessary, we might end up in one of two possible states:

- The “Home” page, with the cursor on the “Home” tab.

The home page.

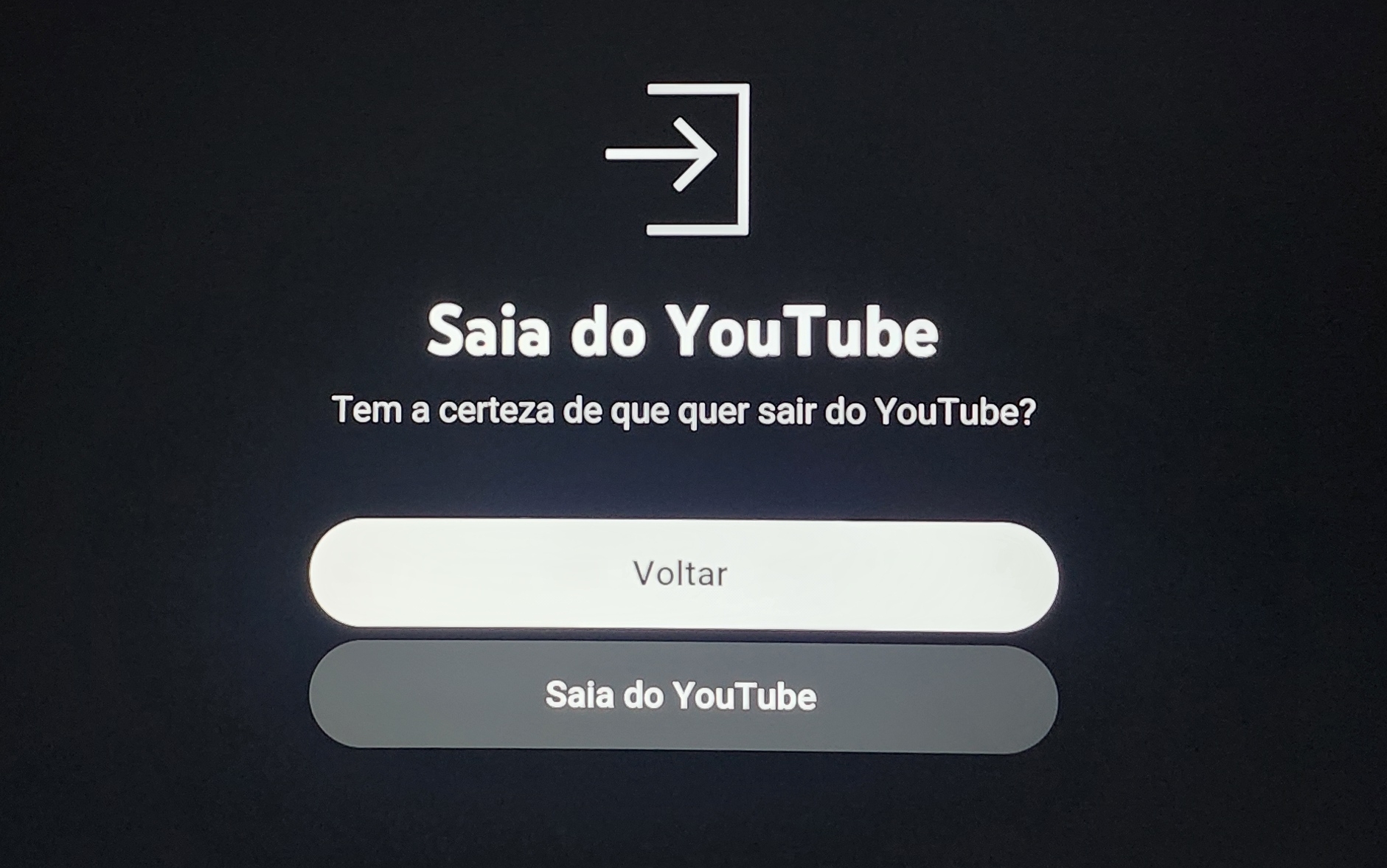

- A modal asking if we are sure we want to log out, with the cursor on the “Back” button.

The exit confirmation modal

At first, this seems like a problem, since we don’t know which of the two states we’ll land in and, as we’ve seen, we need that consistency to continue from there. However, there’s a clever workaround: one command, ✔ Enter, brings us to the same state either way:

- If we land on the logout modal with the cursor on “Back”, pressing ✔ Enter takes us back to the home page.

- If we’re already on the home page, pressing ✔ Enter does nothing because the cursor is already on the “Home” tab.

This way, no matter whether we end up on the “Home” tab or the logout prompt, the ✔ Enter command releases us from this state superposition and collapses it onto a single point: the YouTube home page.

In summary, by using this simple trick, we can ensure that this sequence of commands will always land us in the same place, no matter what the initial state of the YouTube app was. From there, we can reliably issue the rest of the commands to navigate from the “Home” tab to the “Search” input field and continue from there.
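The argument above can be checked with a toy model. This is a minimal sketch, assuming a simplified app in which every screen is some number of RETURN presses away from the home page, and in which RETURN on the exit modal closes it again (that last part is my assumption; the final ENTER works either way). The state names and the press and reset helpers are hypothetical.

```python
# Toy model of the YouTube app's navigation, assumed from the behaviour described
# above: integers are screens that many RETURN presses away from the home page.
HOME, EXIT_MODAL = "home", "exit_modal"


def press(state, key):
    """Apply one key press to the toy model and return the new state."""
    if key == "KEY_RETURN":
        if isinstance(state, int):
            return state - 1 if state > 1 else HOME
        # On the home page RETURN opens the exit modal; on the modal it closes it
        return EXIT_MODAL if state == HOME else HOME
    if key == "KEY_ENTER":
        if state in (HOME, EXIT_MODAL):
            return HOME  # "Back" closes the modal; the "Home" tab keeps us home
        raise ValueError("ENTER on other screens is outside this toy model")
    raise ValueError(f"Unsupported key: {key}")


def reset(state, returns=10):
    """Send `returns` RETURN presses followed by one ENTER."""
    for key in ["KEY_RETURN"] * returns + ["KEY_ENTER"]:
        state = press(state, key)
    return state


starts = [HOME, EXIT_MODAL, *range(1, 11)]
print({reset(s) for s in starts})  # {'home'}
```

The model also makes the one real constraint visible: the number of RETURN presses must be at least the depth of the deepest screen the app can resume on, which is why it pays to send more than strictly necessary.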

The Virtual Keyboard Problem

Once we arrive at the “Search” input field, we can start typing the title of the video we’d like to find. One important limitation is that the API wrapper, just like the physical remote that came with the TV, cannot issue commands for specific letters. Instead, we can only move in the four cardinal directions and press Enter.

To type out a search term, we must therefore navigate a cursor across a virtual keyboard and select each letter one by one. In theory, I could manually figure out the exact sequence of commands needed to spell a few video titles I’m interested in and hardcode them into the script. However, I’d rather have the flexibility to simply provide a list of strings as video titles and let the code figure out the sequence of commands on its own. So… let’s over-engineer this a little.

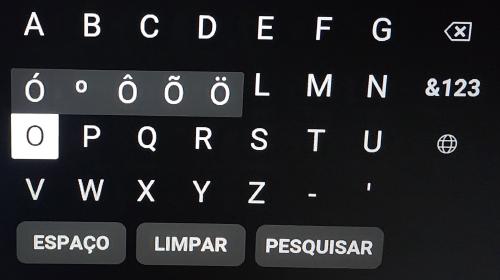

The virtual search keyboard

The YouTube virtual keyboard has the following layout:

| A | B | C | D | E | F | G |
|---|---|---|---|---|---|---|
| H | I | J | K | L | M | N |
| O | P | Q | R | S | T | U |
| V | W | X | Y | Z | - | ' |
| SPACE BAR | | | | | | |

Assuming the cursor’s starting position is always on the letter A, we can easily write a function that takes a string as input and outputs the corresponding sequence of movement commands and ✔ Enter presses to spell it out. From there, we know the cursor’s final position after typing the title, so we can issue a set of hardcoded commands to navigate to and select the “Search” button.
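Ignoring the vowel quirk and the space bar, the core of such a function is a coordinate lookup followed by straight-line moves. This is a simplified sketch with hypothetical names (GRID, moves_between, spell) that moves vertically first, as the full generate_actions.py later in this post does; that version also handles spaces and the diacritics quirk.

```python
GRID = ["ABCDEFG", "HIJKLMN", "OPQRSTU", "VWXYZ-'"]
POS = {ch: (r, c) for r, row in enumerate(GRID) for c, ch in enumerate(row)}


def moves_between(src, dst):
    """Cardinal presses from key `src` to key `dst`, vertical first (no vowel quirk)."""
    (r1, c1), (r2, c2) = POS[src], POS[dst]
    vertical = ["KEY_DOWN"] * (r2 - r1) if r2 > r1 else ["KEY_UP"] * (r1 - r2)
    horizontal = ["KEY_RIGHT"] * (c2 - c1) if c2 > c1 else ["KEY_LEFT"] * (c1 - c2)
    return vertical + horizontal


def spell(word, start="A"):
    """Key presses to type `word`, pressing ENTER on each letter."""
    presses, cur = [], start
    for ch in word:
        presses += moves_between(cur, ch) + ["KEY_ENTER"]
        cur = ch
    return presses


print(len(spell("JAZZ")))  # 17
```

Typing "JAZZ" from A takes 4 presses for J, 4 back to A, 8 down to Z, and a single ENTER for the repeated Z.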

However, there’s a small quirk that needs to be accounted for. When the cursor is on any of the vowels, a submenu appears above the letter showing available diacritic options. In that state, issuing an ↑ UP command doesn’t move to the letter above. It instead moves the cursor into the diacritics submenu.

For example, if the cursor is on O and we want to move to H, a single ↑ UP won’t get us there. It will move to Ó instead.

The virtual keyboard with the diacritics submenu.

The fix isn’t as simple as issuing two ↑ UP commands instead of one when on a vowel, because there’s a sub-quirk: if the cursor is on a vowel and we press ✔ Enter, the diacritics submenu disappears. So the proper logic is:

Issue two ↑ UP commands instead of one when the cursor is on a vowel and the previous command wasn’t ✔ Enter.

A mouthful, I know.
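Written as a function, the rule is easier to digest. A sketch with hypothetical names; like the full script later in this post, it only treats I, O and U specially, because A and E sit on the top row, where an ↑ UP press is never needed.

```python
# Vowels below the top row that show a diacritics submenu above them
SUBMENU_VOWELS = {"I", "O", "U"}


def up_presses(current_key, previous_command):
    """How many UP presses it takes to move exactly one row up from `current_key`."""
    if current_key in SUBMENU_VOWELS and previous_command != "KEY_ENTER":
        return 2  # the first UP only lands in the diacritics submenu
    return 1


print(up_presses("O", "KEY_DOWN"))   # 2: O -> Ó -> H
print(up_presses("O", "KEY_ENTER"))  # 1: ENTER just closed the submenu
print(up_presses("P", "KEY_LEFT"))   # 1: consonants never show a submenu
```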

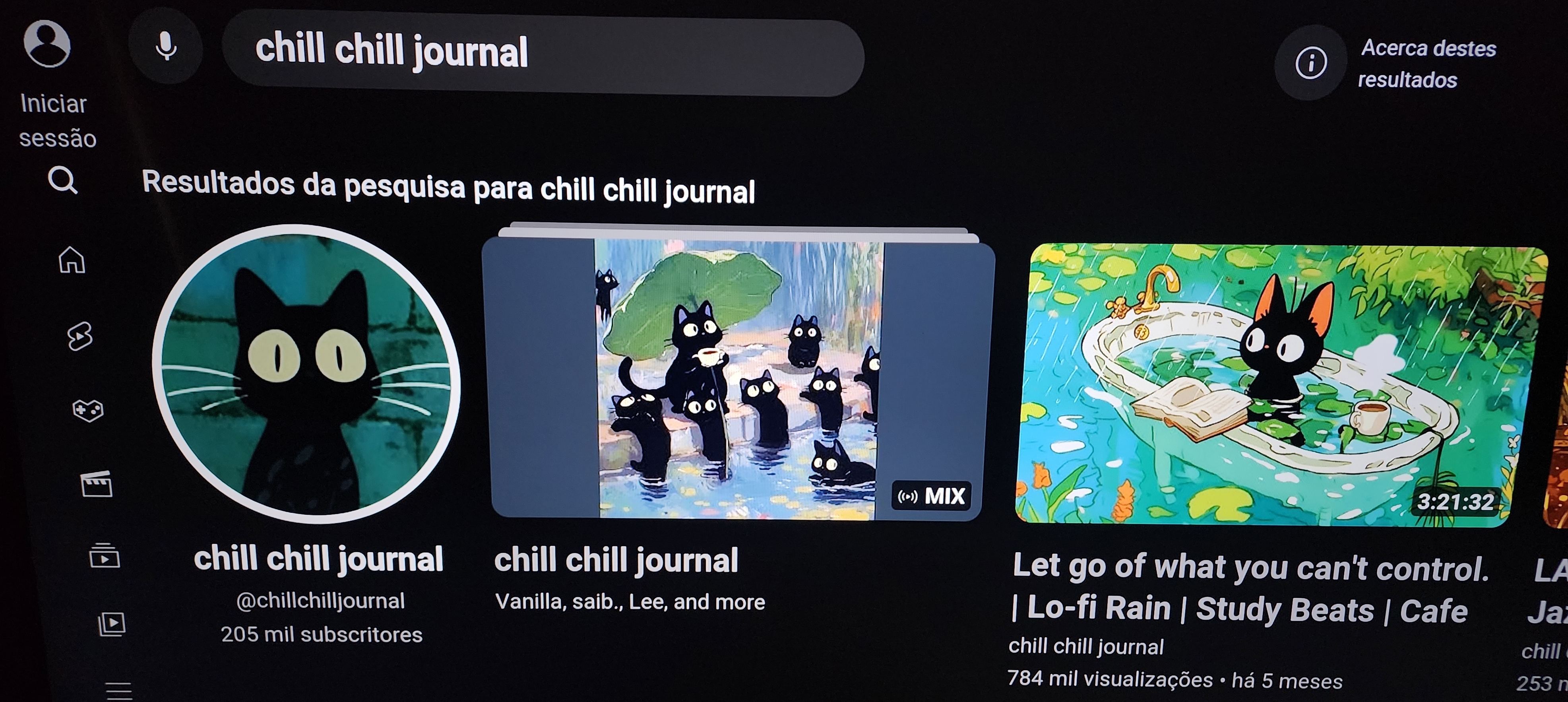

The Video Selection Problem

This is an issue I wasn’t able to fix, and I’m afraid it might be impossible.

After searching for the video, the script moves the cursor to the UI row containing the search results and randomly selects one of the first few items. The idea is that I don’t want to listen to the same four music videos every day, so picking related videos to mix things up is fine.

However, there are a few problems:

- Sometimes, the YouTube app will show sponsored videos in the search results. If those videos were truly relevant to my search, YouTube wouldn’t need to charge the authors to display them.

- Depending on the search term, YouTube may include the channel itself as a search result. That means we could accidentally open a channel instead of a video, so instead of waking up to music, I wake up to an open YouTube channel.

In this search result, the first option is a channel instead of a video.

Since the script is blind, there’s unfortunately no way (that I can think of) to work around this. We cannot tell which item is a video and which is a sponsored link or a channel. The only solution is to rely on probability: by picking randomly among the first five items, we’re more likely to select a real video than a sponsored video or a channel.
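To put rough numbers on it: if k of the first five results are channels or sponsored entries, a uniform random pick lands on a real video with probability (5 - k)/5. A tiny sketch of that arithmetic; chance_of_real_video is a hypothetical helper, and the values of k are illustrative, not measurements.

```python
from fractions import Fraction


def chance_of_real_video(non_videos, pool=5):
    """Probability that a uniform pick among the first `pool` results is a real video."""
    if not 0 <= non_videos <= pool:
        raise ValueError("non_videos must be between 0 and pool")
    return Fraction(pool - non_videos, pool)


for k in range(3):
    print(k, chance_of_real_video(k))
# 0 1
# 1 4/5
# 2 3/5
```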

The Advertisement Problem

Lastly, there’s the issue of advertisements. As an avid uBlock Origin enjoyer, I’m accustomed to never seeing ads when I browse the web, including YouTube. However, installing ad-block software on Tizen OS is a bit of a hassle, so I’ve been putting off installing TizenTube on the TV.

To my disappointment (and ignorance), YouTube is absolutely riddled with ads, especially the TV version. I assume they crank up the ads several thousand notches because they expect people who watch YouTube on smart TVs to be used to the five-minute intermissions of cable television. I guess they are not entirely wrong, but I definitely don’t want to wake up to Raid: Shadow Legends instead of Nujabes.

Until I implement a more permanent solution (either installing TizenTube or setting up some kind of DNS-level ad-block), the current workaround is simple: after playing a video, wait 90 seconds before bringing the TV volume back up from zero (remember step two?), in case YouTube decides to serve an advertisement.

The Execution

If you’d like to see the source code, you can find it below. There are a few more features and quirks than I’ve covered here, but I’ll let anyone interested figure those out.

generate_actions.py

```python
import os
import random

# ----------------------------
# Configuration
# ----------------------------

YOUTUBE_ID = '111299001912'

VIDEOS = [
    'healing me morning lofi',
    'chill chill journal morning',
    'yawaraka jazz',
    'the jazz hop cafe morning',
    'vintage bossa nova instrumental',
    'Lofi Tone Art morning',
    'Nujabes mix'
]

FINAL_VOLUME = 7
AD_TIME = 90  # seconds

# Write actions.lst next to this script, which is where execute_actions.py looks
# for it, so the result doesn't depend on the directory cron starts us in
ACTIONS_FILE = os.path.join(os.path.dirname(os.path.realpath(__file__)), 'actions.lst')

# ----------------------------
# Helper Functions
# ----------------------------

def keyboard_movements(text):
    """Generate remote key commands to type a string on the TV keyboard, including spaces."""
    grid = [
        ['A', 'B', 'C', 'D', 'E', 'F', 'G'],
        ['H', 'I', 'J', 'K', 'L', 'M', 'N'],
        ['O', 'P', 'Q', 'R', 'S', 'T', 'U'],
        ['V', 'W', 'X', 'Y', 'Z', '-', "'"]
    ]
    char_pos = {char: (r, c) for r, row in enumerate(grid) for c, char in enumerate(row)}

    movements = []
    cur_r, cur_c = 0, 0  # Start at 'A'

    for char in text:
        if char == ' ':
            # Move down to the bottom letter row, then once more onto the space bar
            while cur_r < 3:
                movements.append('KEY_DOWN')
                cur_r += 1
            movements.append('KEY_DOWN')  # Extra down to reach space
            movements.append('# New letter: <SPACE>')
            movements.append('KEY_ENTER')
            movements.append('KEY_UP')  # Come back up to the main virtual keyboard
            continue

        target_r, target_c = char_pos[char.upper()]

        # Vertical movement
        while cur_r < target_r:
            movements.append('KEY_DOWN')
            cur_r += 1
        while cur_r > target_r:
            # On I, O or U the first UP enters the diacritics submenu, unless the
            # previous command was ENTER (which closes the submenu)
            if grid[cur_r][cur_c] in {'O', 'U', 'I'} and movements[-1] != 'KEY_ENTER':
                movements.append('KEY_UP')
                movements.append('KEY_UP')
            else:
                movements.append('KEY_UP')
            cur_r -= 1

        # Horizontal movement
        while cur_c < target_c:
            movements.append('KEY_RIGHT')
            cur_c += 1
        while cur_c > target_c:
            movements.append('KEY_LEFT')
            cur_c -= 1

        movements.append('# New letter: ' + grid[cur_r][cur_c])
        movements.append('KEY_ENTER')

    # Move to the bottom row once, after the whole string has been typed
    # (the cursor never goes below row 3, so only downward moves are needed)
    while cur_r < 3:
        movements.append('KEY_DOWN')
        cur_r += 1

    return movements

# ----------------------------
# Action Builder
# ----------------------------

def main():
    actions = []

    # --- Power on ---
    actions.append("# Power on TV")
    actions.append("KEY_POWER")
    actions.append("SLEEP_2")

    # --- Mute TV (execute_actions.py turns KEY_VOLDOWN into a background mute) ---
    actions.append("# Mute TV")
    actions.append("KEY_VOLDOWN")
    actions.append("SLEEP_0.1")

    # --- Launch YouTube ---
    actions.append("# Launch YouTube")
    actions.append(f"APP_RUN_{YOUTUBE_ID}")
    actions.append("SLEEP_5")

    # --- Navigate to search ---
    actions.append("# Navigate to search")
    actions.extend([
        "KEY_ENTER",
        *["KEY_RETURN"] * 10,  # Back out to the home page or the exit modal...
        "KEY_ENTER",           # ...and ENTER collapses both onto the "Home" tab
        "KEY_LEFT",
        *["KEY_UP"] * 4,
        "KEY_DOWN",
        "KEY_ENTER",
        "KEY_RIGHT",
        "KEY_DOWN"
    ])

    # --- Type search term ---
    search_term = random.choice(VIDEOS)
    actions.append(f"# Type search term: {search_term}")
    actions.extend(keyboard_movements(search_term.upper()))

    # --- Execute search ---
    actions.append("# Execute search and open results")
    actions.extend([
        "KEY_DOWN",
        "KEY_RIGHT",
        "KEY_RIGHT",
        "KEY_ENTER",
        "SLEEP_1"
    ])

    # --- Select a video ---
    # 0 to 4 RIGHT presses pick one of the first five results
    actions.append("# Select a random video from results")
    for _ in range(random.randint(0, 4)):
        actions.append("KEY_RIGHT")

    # --- Play the video ---
    actions.append("# Play the selected video")
    actions.append("KEY_ENTER")

    # --- Wait for ads ---
    actions.append("# Wait for ads to finish")
    actions.append(f"SLEEP_{AD_TIME}")

    # --- Restore volume ---
    actions.append("# Restore volume to final level")
    for _ in range(FINAL_VOLUME + 1):
        actions.append("KEY_VOLUP")

    # --- Save to file ---
    with open(ACTIONS_FILE, "w") as f:
        for action in actions:
            f.write(f"{action}\n")

    print(f"[*] actions.lst created with {len(actions)} lines (including comments).")


if __name__ == "__main__":
    main()
```

execute_actions.py

```python
import os
import sys
import time
import threading

from samsungtvws import SamsungTVWS

# ----------------------------
# Configuration
# ----------------------------

TV_IP = '192.168.1.64'
TOKEN = os.path.join(os.path.dirname(os.path.realpath(__file__)), 'tv-token.txt')
ACTIONS_FILE = os.path.join(os.path.dirname(os.path.realpath(__file__)), 'actions.lst')

# ----------------------------
# Helper Functions
# ----------------------------

def read_actions():
    """Read remaining actions from file, ignoring empty lines."""
    if not os.path.exists(ACTIONS_FILE):
        print("[!] actions.lst not found.")
        return []
    with open(ACTIONS_FILE, "r") as f:
        lines = [line.strip() for line in f.readlines() if line.strip()]
    return lines


def write_remaining_actions(lines):
    """Overwrite actions.lst with remaining lines."""
    with open(ACTIONS_FILE, "w") as f:
        for line in lines:
            f.write(f"{line}\n")


def mute_tv(tv):
    """Bring volume down completely in the background."""
    print("[*] Lowering volume in background...")
    try:
        # 100 presses reach 0 from any starting volume
        for _ in range(100):
            tv.send_key("KEY_VOLDOWN")
            time.sleep(0.1)
        print("[*] Volume muted.")
    except Exception as e:
        print(f"[!] Background volume thread encountered an error: {e}")


def execute_action(tv, action):
    """Execute one action. Raise an exception on failure."""
    if action.startswith("#"):
        print(f"[*] Comment: {action}")
        return  # Skip comments

    elif action.startswith("SLEEP_"):
        # Sleep action
        try:
            sleep_time = float(action.split("_", 1)[1])
        except ValueError:
            raise ValueError(f"Invalid sleep format: {action}")
        print(f"[*] Sleeping {sleep_time} seconds...")
        time.sleep(sleep_time)

    elif action.startswith("APP_RUN_"):
        # Launch app
        app_id = action.split("_", 2)[2]
        print(f"[*] Launching app: {app_id}")
        tv.rest_app_run(app_id)

    elif action == "KEY_VOLDOWN":
        # Mute in background; a daemon thread dies with the process, so the
        # later sleeps are what give it time to finish
        print("[*] Starting background mute operation...")
        threading.Thread(target=mute_tv, args=(tv,), daemon=True).start()

    elif action.startswith("KEY_"):
        # Regular key command
        print(f"[*] Sending key: {action}")
        tv.send_key(action)

    else:
        raise ValueError(f"Unknown action: {action}")

# ----------------------------
# Main Logic
# ----------------------------

def main():
    print("[*] Starting action executor...")

    # Connect to TV
    try:
        tv = SamsungTVWS(host=TV_IP, port=8002, token_file=TOKEN)
        print("[*] Connected to TV.")
    except Exception as e:
        print(f"[!] Failed to connect to TV: {e}")
        sys.exit(1)

    while True:
        actions = read_actions()
        if not actions:
            print("[*] All actions completed!")
            break

        current_action = actions[0]
        print(f"\n[*] Executing: {current_action}")

        try:
            execute_action(tv, current_action)
        except Exception as e:
            print(f"[!] Error executing '{current_action}': {e}")
            print("[!] Halting execution. Try again later to resume.")
            sys.exit(1)  # run.sh retries, resuming from the remaining actions

        # Remove successfully executed line
        remaining = actions[1:]
        write_remaining_actions(remaining)
        print(f"[*] Completed: {current_action}")

        # Small buffer delay between actions
        time.sleep(0.2)

    print("[*] All done. No more actions left.")


if __name__ == "__main__":
    main()
```

run.sh

```bash
#!/bin/bash

echo "============================================="
echo " Cron execution started at: $(date)"
echo " Starting TV automation (generate + execute)"
echo "---------------------------------------------"

# Paths (resolved from this script's location, since cron may run it
# from a different working directory)
PYTHON_BIN="python"
SCRIPT_DIR="$(cd "$(dirname "$0")" && pwd)"
GEN_SCRIPT="$SCRIPT_DIR/generate_actions.py"
EXEC_SCRIPT="$SCRIPT_DIR/execute_actions.py"

# Generate the actions list
echo "[*] Generating action list..."
$PYTHON_BIN "$GEN_SCRIPT"
GEN_EXIT=$?
if [ $GEN_EXIT -ne 0 ]; then
    echo "[!] Failed to generate actions.lst (exit code $GEN_EXIT)"
    echo "============================================="
    exit 1
fi
echo "[*] actions.lst generated successfully!"
echo "---------------------------------------------"

# Retry configuration
MAX_RETRIES=10
COUNT=0

# Execute with retries; each attempt resumes from whatever is left in actions.lst
while [ $COUNT -lt $MAX_RETRIES ]; do
    echo "[*] Running execute_actions.py (attempt $((COUNT+1))/$MAX_RETRIES)"
    $PYTHON_BIN "$EXEC_SCRIPT"
    EXIT_CODE=$?
    if [ $EXIT_CODE -eq 0 ]; then
        echo "[*] Execution completed successfully!"
        break
    else
        COUNT=$((COUNT+1))
        echo "[!] Execution failed with exit code $EXIT_CODE"
        if [ $COUNT -lt $MAX_RETRIES ]; then
            echo "[!] Retrying in 3 seconds..."
            sleep 3
        else
            echo "[!] Maximum retries ($MAX_RETRIES) reached. Giving up."
            break
        fi
    fi
done

echo "[*] Automation complete!"
echo "============================================="
echo "" # extra newline for readability
```

At this point, I had a Python/Bash program that, when executed, makes my television play groovy songs. Awesome! Thinking the hard part was done, I started considering my options for triggering it automatically.

Had I had a server, the solution would be simple: copy the scripts to it, create a cron job for 06:50 every day, and let it execute run.sh. Easy peasy. However, I don’t have a home server. I do have a spare computer I could use, but I haven’t found enough utility in a server to justify the electricity cost of keeping it running. So that was out of the picture.

I also considered buying a Raspberry Pi for this, but I had a better idea. I already have a device that’s always on my home network at 07:00 AM and is never turned off: my smartphone! I could just run the program there and have it interact with the TV in the morning.

Updated on 02/01/2026: I received a Raspberry Pi as a Christmas gift, so now I have the script running on it instead!

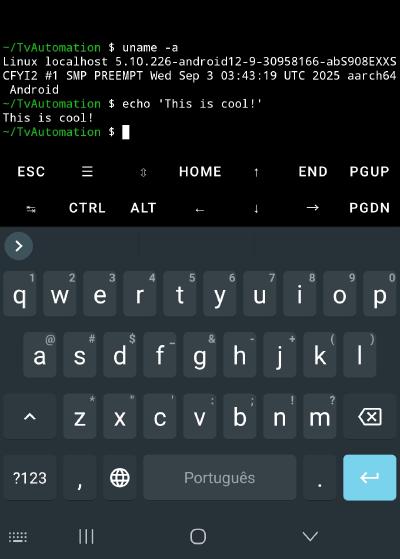

The first rabbit hole I went down was figuring out how to run Python on Android. I understand that Python programs aren’t meant to be compiled into executables, but I’d heard it was possible to convert them to C programs, so I figured I could do that and then take it from there into APKs. After a long time experimenting with Buildozer, I gave up on this approach.

Since I couldn’t turn my Python program into something else, I decided to run a Python interpreter directly on my phone. That’s when I found Termux, which is essentially a Linux environment on Android. It’s an awesome app that I’ll definitely keep on my smartphone regardless, but it was especially useful for this project. I copied the script files to the Termux file system, installed Python and other dependencies, and voilà! I had it running on my phone.

Running a Linux terminal on Android.

Then came the second rabbit hole: how do I schedule the run.sh file to run every day at 06:50? I thought this would be easy, but it turns out running scheduled tasks like this on Android is nearly impossible. Most vendors aggressively kill or suspend background apps to preserve battery life. I tried a bunch of solutions, including the paid app Tasker, but nothing worked.

In the end, the solution I settled on was to tweak some Android settings to make it as clear as possible to the OS that I don’t want Termux suspended, killed, or put to sleep. Then, I created a cron job inside the Termux Linux environment to execute the bash script every morning. So far, no problems with this approach!

Cron job executing run.sh.

Conclusion & Demo

Below, you can see a demonstration of the automation in action.

(In this video, my virtual keyboard pathfinding function had a bug that sent the cursor to the bottom row after each letter. This has now been fixed.)

The whole process is quite slow: it takes up to around four minutes for the music to start playing (the video is sped up 4x). In any case, since I can schedule it to start a few minutes before I wake up, this doesn’t bother me at all.

This was a fun project, and definitely something I don’t have much experience with. I got a bit involved (or maybe one could even say obsessed) over the past few days, so I’m glad it’s finally finished and I can now focus on my other, equally time-consuming projects!